In the dynamic landscape of data processing, real-time Extract, Transform, Load (ETL) has become indispensable for organizations striving to make informed, timely decisions.

Visual Flow, a leading provider of innovative solutions for IT developers, unveils strategies for mastering real-time ETL, empowering organizations to process data efficiently and derive actionable insights.

This comprehensive guide delves into various approaches and best practices for real-time ETL processes, including the importance of data quality management and the integration of advanced analytics to predict trends and behaviors.

Leveraging these strategies, organizations can enhance operational agility and responsiveness. Moreover, by adopting these techniques, businesses can optimize their resource allocation, minimize errors, and increase the overall speed of decision-making processes.

The strategic use of real-time ETL strengthens data infrastructure and equips companies with the tools they need to thrive in a data-centric world. It also prepares teams to absorb unexpected spikes and fluctuations in data volume while maintaining robust performance under varying operational demands.

ETL migration services play a crucial role in this domain, facilitating the seamless transition of data pipelines and workflows between different environments and platforms while ensuring continuity and reliability.


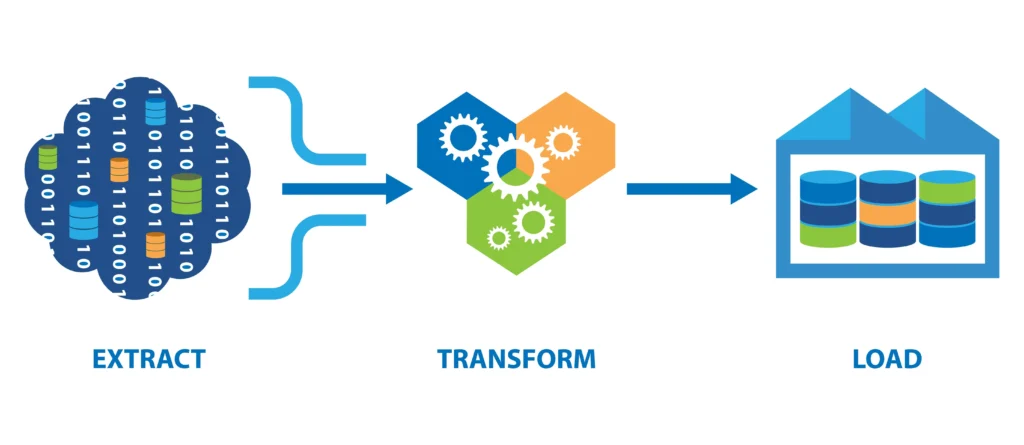

Understanding Real-Time ETL: Key Concepts

Source: datachannel.co

- Streaming Data: Real-time ETL involves processing streaming data continuously as it is generated, enabling organizations to analyze and act on data in near real time.

- Low Latency: Real-time ETL systems operate with minimal latency, ensuring that data is processed and transformed swiftly to meet the requirements of time-sensitive applications.

- Event-Driven Architecture: Real-time ETL is often built on event-driven architectures, where data processing is triggered by events or changes in the data stream.

- Scalability: Real-time ETL systems are designed to scale horizontally to handle fluctuations in data volume and processing requirements, ensuring consistent performance under varying workloads.

- Fault Tolerance: Real-time ETL solutions incorporate fault-tolerant mechanisms to ensure data integrity and reliability, even in the face of failures or disruptions.
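The following minimal Python sketch uses only the standard library to show the event-driven and fault-tolerance ideas together. It assumes each event carries a monotonically increasing offset, similar in spirit to a Kafka partition offset. The `Event` and `CheckpointedConsumer` names are hypothetical and do not belong to any framework.

Each event triggers processing on arrival. The consumer records the last successfully processed offset, so after a crash it can replay the stream and skip work it has already done.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Event:
    offset: int    # position in the source stream (assumed to increase monotonically)
    payload: dict


@dataclass
class CheckpointedConsumer:
    """Run a handler for every new event and checkpoint the last processed offset.

    The checkpoint lives in memory here; a real pipeline would store it durably.
    """
    handler: Callable[[Event], None]
    committed_offset: int = -1  # -1 means nothing has been processed yet

    def run(self, stream: Iterable[Event]) -> None:
        for event in stream:
            if event.offset <= self.committed_offset:
                continue  # already handled before a restart; skip it
            self.handler(event)                   # the event itself triggers processing
            self.committed_offset = event.offset  # commit only after the handler succeeds


# Usage: simulate a crash while handling offset 2, then restart.
events = [Event(i, {"value": i * 10}) for i in range(5)]
sink: List[int] = []
crash_at = {2}


def load(event: Event) -> None:
    if event.offset in crash_at:
        crash_at.discard(event.offset)  # fail only on the first attempt
        raise RuntimeError("simulated worker crash")
    sink.append(event.payload["value"])


consumer = CheckpointedConsumer(handler=load)
try:
    consumer.run(events)
except RuntimeError:
    pass  # the checkpoint survives the crash (offset 1 is committed)
consumer.run(events)  # replay the stream; offsets 0 and 1 are skipped
print(sink)  # [0, 10, 20, 30, 40]
```

The consumer commits the offset only after the handler succeeds, which gives at-least-once processing. If the worker crashed between the handler and the commit, that event would be handled again on restart. This is one reason idempotent sinks matter.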

Strategies for Efficient Real-Time ETL

- Use of Stream Processing Frameworks: Leverage stream processing frameworks like Apache Kafka Streams, Apache Flink, or Spark Structured Streaming to process data in real time with high throughput and low latency.

- Microbatching: Implement microbatching techniques that group incoming records into small batches processed at short, regular intervals. This amortizes per-record overhead, such as network round trips, in exchange for a small amount of added latency.

- Parallel Processing: Utilize parallel processing techniques to distribute data processing tasks across multiple nodes or cores, maximizing throughput and minimizing processing times.

- Caching and Materialized Views: Employ caching mechanisms and materialized views to store intermediate results and frequently accessed data, reducing latency and improving query performance.

- Optimized Data Pipelines: Design data pipelines that avoid unnecessary work to minimize resource utilization and maximize throughput. For example, filter and project data as early as possible, and choose data structures and algorithms suited to each processing step.

- Monitoring and Alerting: Implement comprehensive monitoring and alerting systems to track the performance and health of real-time ETL pipelines, enabling proactive maintenance and troubleshooting.
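Microbatching can be sketched in a few lines. The class below is an illustrative, hand-rolled buffer, not any framework's API. It flushes when either a size limit or a maximum wait is reached. The names `max_size`, `max_wait_s`, and the injectable `clock` are assumptions chosen for the example. Stream processors normally handle this for you; Spark Structured Streaming, for instance, executes queries as a series of micro-batches by default.

```python
import time
from typing import Callable, List, Optional


class MicroBatcher:
    """Buffer records and hand them to a sink as one batch.

    A batch is flushed when it reaches max_size records or when its oldest
    record has waited max_wait_s seconds. Both limits are illustrative.
    """

    def __init__(
        self,
        sink: Callable[[List[dict]], None],
        max_size: int = 100,
        max_wait_s: float = 0.5,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.sink = sink
        self.max_size = max_size
        self.max_wait_s = max_wait_s
        self.clock = clock
        self.buffer: List[dict] = []
        self.oldest: Optional[float] = None  # arrival time of the first buffered record

    def add(self, record: dict) -> None:
        if not self.buffer:
            self.oldest = self.clock()
        self.buffer.append(record)
        if len(self.buffer) >= self.max_size or self._expired():
            self.flush()

    def tick(self) -> None:
        """Call periodically so a partly filled batch still goes out on time."""
        if self.buffer and self._expired():
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            self.sink(self.buffer)
            self.buffer = []  # start a fresh list; the sink keeps the old one
            self.oldest = None

    def _expired(self) -> bool:
        return self.oldest is not None and self.clock() - self.oldest >= self.max_wait_s


# Usage with a fake clock so the example is deterministic.
now = [0.0]
batches: List[List[dict]] = []
batcher = MicroBatcher(sink=batches.append, max_size=3, max_wait_s=1.0, clock=lambda: now[0])
for i in range(4):
    batcher.add({"id": i})  # the third record fills the first batch
now[0] = 1.5                # time passes with one record still buffered
batcher.tick()              # the wait limit flushes the partial batch
print([[r["id"] for r in b] for b in batches])  # [[0, 1, 2], [3]]
```

The two thresholds express the trade-off. A larger `max_size` amortizes per-batch overhead. Meanwhile, `max_wait_s` caps how long any single record can wait before it is loaded.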

Challenges and Considerations

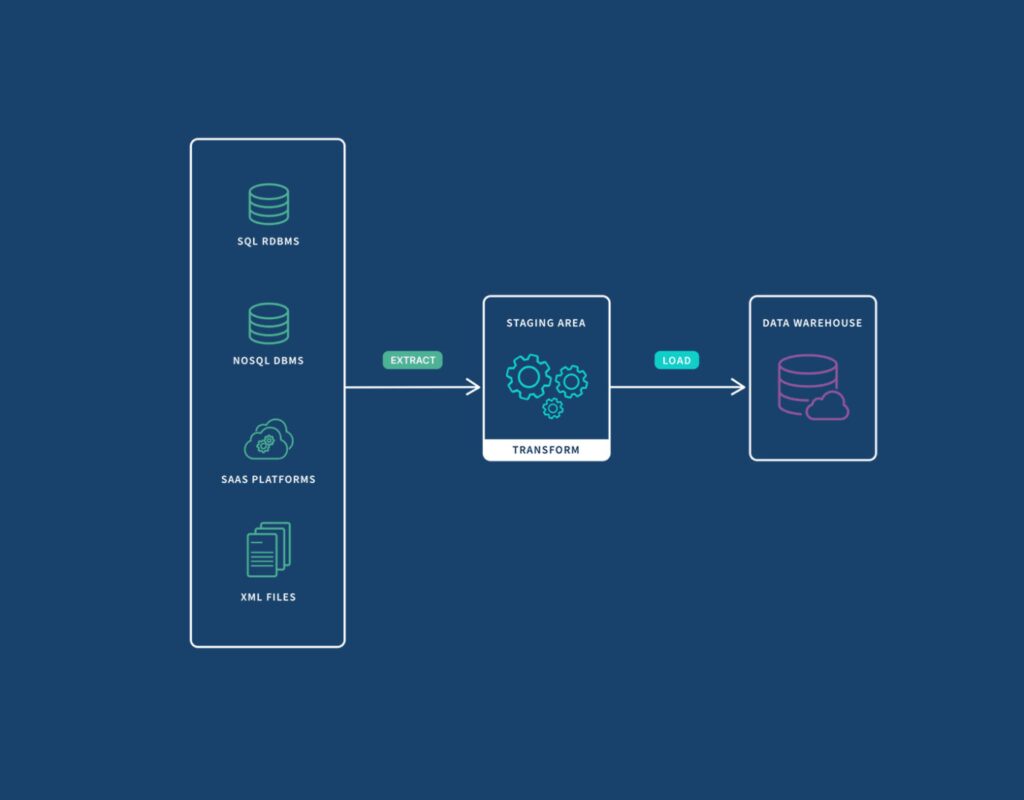

Source: qlik.com

- Data Consistency: Ensuring data consistency and accuracy in real-time ETL pipelines, especially in distributed and parallel processing environments.

- Resource Management: Efficiently managing resources, such as CPU, memory, and network bandwidth, to prevent bottlenecks and optimize performance.

- Scalability: Scaling real-time ETL systems horizontally to accommodate growing data volumes and processing demands while maintaining performance and reliability.

- Data Governance: Enforcing data governance policies and compliance requirements in real-time data processing workflows to ensure data integrity and security.

- Error Handling and Recovery: Implementing robust error handling and recovery mechanisms to handle failures gracefully and minimize data loss or corruption.
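Two of these challenges, data consistency and error handling, are often addressed together with an idempotent sink plus retries and a dead-letter queue. The sketch below assumes every record carries a unique `event_id` and that the target supports upserts by that key. The names `TransientError`, `IdempotentSink`, and `load_with_retry` are hypothetical, for illustration only. The sketch handles three cases:

- Transient failures are retried with exponential backoff.
- Malformed records go straight to the dead-letter list.
- Duplicate deliveries overwrite rather than double-count.

```python
import time
from typing import Callable, Dict, List, Tuple


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying, such as a timeout."""


class IdempotentSink:
    """In-memory stand-in for a table that supports upserts keyed by event_id."""

    def __init__(self) -> None:
        self.table: Dict[str, dict] = {}

    def write(self, record: dict) -> None:
        if "event_id" not in record:
            raise ValueError("missing event_id")  # bad data: retrying cannot fix it
        self.table[record["event_id"]] = record  # upsert: a replayed event overwrites itself


def load_with_retry(
    records: List[dict],
    write: Callable[[dict], None],
    max_attempts: int = 3,
    backoff_s: float = 0.1,
) -> List[Tuple[dict, str]]:
    """Write each record, retrying transient failures; return (record, reason) dead letters."""
    dead_letters: List[Tuple[dict, str]] = []
    for record in records:
        for attempt in range(1, max_attempts + 1):
            try:
                write(record)
                break  # success: move on to the next record
            except TransientError as exc:
                if attempt == max_attempts:
                    dead_letters.append((record, str(exc)))  # give up after the last attempt
                else:
                    time.sleep(backoff_s * 2 ** (attempt - 1))  # exponential backoff
            except ValueError as exc:
                dead_letters.append((record, str(exc)))  # permanent error: no retry
                break
    return dead_letters


# Usage: a write that times out once for event "b", a duplicate "a", and a malformed record.
sink = IdempotentSink()
fail_once = {"b"}


def flaky_write(record: dict) -> None:
    if record.get("event_id") in fail_once:
        fail_once.discard(record["event_id"])
        raise TransientError("timeout")
    sink.write(record)


batch = [
    {"event_id": "a", "amount": 5},
    {"event_id": "b", "amount": 7},
    {"event_id": "a", "amount": 5},  # duplicate delivery of "a"
    {"amount": 9},                   # malformed: no event_id
]
dlq = load_with_retry(batch, flaky_write, backoff_s=0.0)  # no waiting in this demo
print(sorted(sink.table))  # ['a', 'b']
print(dlq)                 # [({'amount': 9}, 'missing event_id')]
```

In a production pipeline, the dead-letter list would typically be written to a separate topic or table so the failed records can be inspected and replayed later.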

Empowering Data Agility with Visual Flow

As organizations embrace the transformative potential of real-time ETL, Visual Flow stands ready to empower IT developers with cutting-edge solutions and expertise. By mastering real-time ETL strategies, organizations can unlock the full potential of their data assets. The Visual Flow platform provides a comprehensive ecosystem for building and deploying real-time ETL pipelines, with support for popular stream processing frameworks such as Apache Kafka Streams and Apache Flink.

Its intuitive user interface and visual design tools enable developers to design complex data workflows with ease, reducing development time and effort. Additionally, Visual Flow offers a library of pre-built connectors, transformations, and analytics functions, empowering developers to rapidly prototype and iterate on real-time ETL solutions without reinventing the wheel.

Elevating Data Agility with Real-Time ETL

Source: confluent.io

In conclusion, mastering real-time ETL is essential for organizations seeking to process data efficiently and derive timely insights to drive business decisions. By implementing strategies such as stream processing frameworks, microbatching, and parallel processing, organizations can optimize their real-time ETL pipelines for high throughput, low latency, and fault tolerance.

With Visual Flow's guidance and expertise, organizations can navigate the complexities of real-time data processing and harness its transformative potential to achieve data agility and innovation. Explore the possibilities of real-time ETL with Visual Flow and elevate your organization’s data capabilities to new heights.